Simple AI Transcription Workflow in NotebookLM

Rapid Summary and Iteration with better prompting

If you find yourself in a lot of meetings, or running a lot of workshops, one of the most immediately useful applications of AI tools is in transcription and summarization. We’re all used to the announcements in Zoom calls that the meeting is being recorded, but the function is just as useful, if not more so, in physical meetings as well.

I’ve been experimenting with a lot of different methods of rapid summarization using AI, but, by far the easiest so far has been building a workflow around NotebookLM. Because I’ve had a lot of requests to outline the process in detail, I’m going to lay it out here, step by step.

I will say that there are a lot of ways of doing this, and different reasons for doing them, but this post is not a comparison - it’s just a workflow for this one method.

If you want to use this workflow as a jumping off point, it might be useful to think of the different parts of the work to be done in the process, as each is its own opportunity to try something different.

If you’re going to be creating AI summaries, the main steps are as follows (and I know it may sound obvious, but once you start experimenting, the differences matter):

Recording: think about how you’re going to be capturing the audio - what type of microphone or recorder, and how you’ll start and stop the recording, and where, exactly, the file will end up (on the device? in the cloud?) This is easy if you’re talking about a single device, but if you’re doing several groups in parallel, this gets messy, quickly, if you don’t have a system. Also, record with the end in mind - starting and stopping in different sections or different speakers can make the summarization easier later.

File management: how you’ll get the recordings from the recorder and into whatever you’re organizing them in. Again, in parallel sessions, or with a lot of recordings in a row, figuring out how to get the recordings organized is important. The more quickly you can file the recordings, the less likely you are to mix up all the files. File formats also matter, as not all file types can be processed by all of the AI tools.

Transcription: If you are working with audio recordings, instead of jumping straight to transcription, you have options for how to work with them, and can reprocess them if you aren’t satisfied with the results. Here, also, your prompts will influence the results that you get.

Summarization: By splitting up transcription and summarization as discrete steps, you can reprocess to your heart’s content depending on the level and focus of summary that you’re looking for.

Threading: As your files accumulate in a longer process, thinking about how to analyze across all of the recordings allows you to surface patterns and themes. This is where your earlier file management matters - if you’ve kept your sources clear, you can decide on which ones to include in various summaries.

Now, as I mentioned, this is not a comparison post of the various types of AI tools you could use for this, but there are a few reasons for using NotebookLM here:

The transcriptions are top-notch, and highly configurable to whatever style you’re looking for.

The summarizations are astonishingly good.

If you’re in a paid Google Workspace account, the privacy and security are acceptable for professional use, without worries that all your data is being used for model training.

It’s very easy to use.

It has been trained to infer the context of the conversations with eery accuracy (it can discern speakers, understand the difference between an ice-breaker and the substance of a conversation and read between the lines beyond simple literal understanding).

How to Think About NotebookLM

NotebookLM is different from some of the other AI tools you might have used, like ChatGPT. Whereas general LLMs have a bunch of different functions, NotebookLM is specially tuned to work primarily on the sources that you add to it, as opposed to a broader body of knowledge it has, which means you are basically building a mini-LLM with just the data that you want. Everything you put in an individual “notebook” becomes the basis for summary and analysis, so it’s like a small, topical workspace for the project at hand. If this is a single project or workshop, all of the relevant files and inputs build context that you can then query and summarize any way that you like. You can also input notes that will let NotebookLM better understand the content that you’ve added, which I’ll explain more later.

The Workflow

Step 1: Capture Your Audio

I won’t go into a lot of detail on how to capture the audio itself, as I’ve covered that elsewhere, and it’s a whole other set of considerations. You can read about one version of how to capture audio here.

Step 2: Organize and Pre-process your Audio

The number one consideration here is making sure that you have the ability to easily tell one recording from another once you have a bunch of them. If you’re in a workshop, being diligent about dropping the recordings into sequenced folders, or renaming the files by topic will make your life a lot easier later on, especially if you’re not processing them right away.

Some audio recorders timestamp all the files, which can be incredibly helpful, and if you’re recording on your phone, you can quickly rename the recordings right in the voice recording app.

You also need to be aware that NotebookLM only accepts .mp3 and .m4a files for audio, so if your recorder recorded in any other format, you’ll have to convert it. I use Adobe Media Encoder, as I can turn anything (video included) into .mp3, but a quick search can tell you your options if you don’t know how.

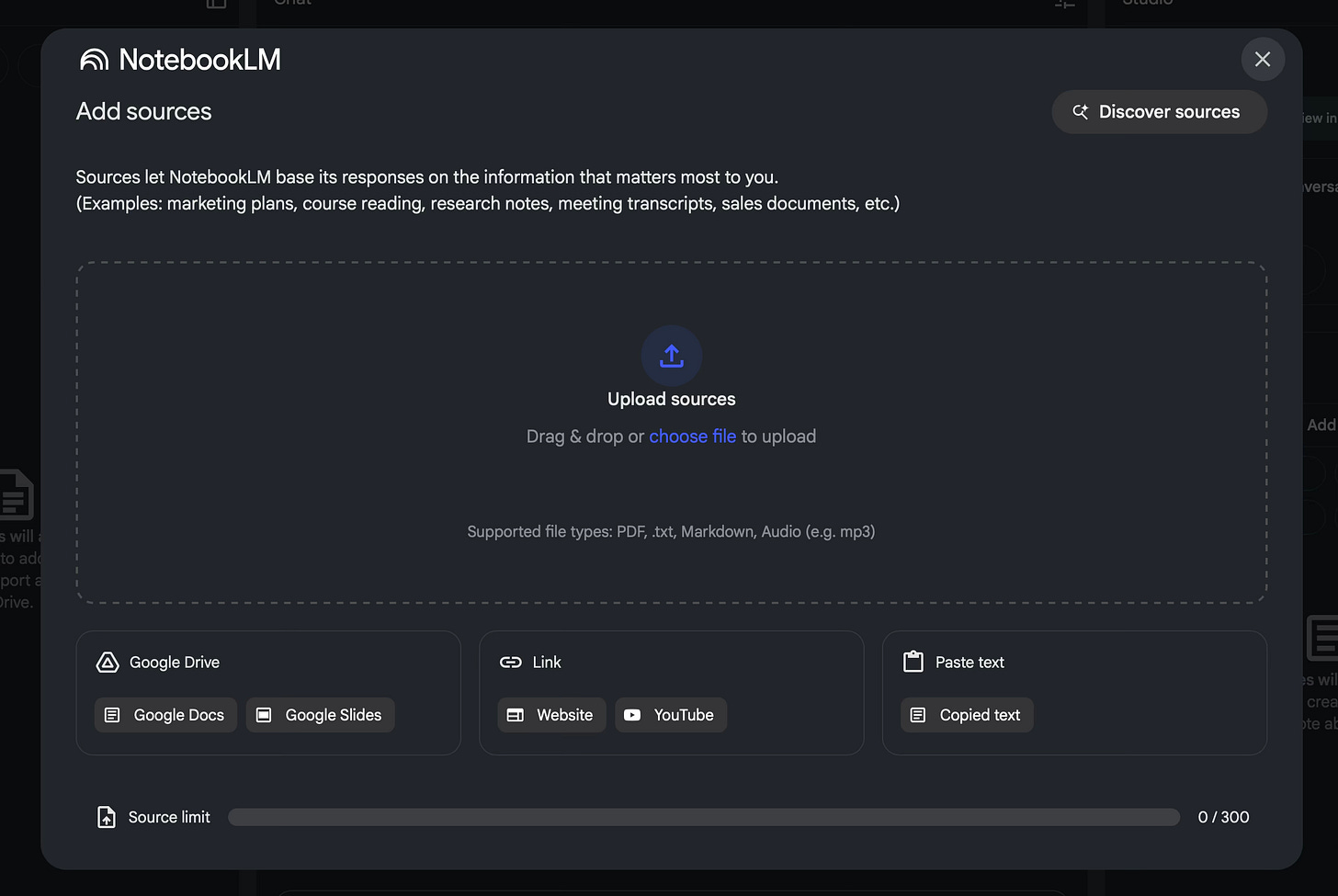

Step 3: Add your sources to NotebookLM

When you open a new Notebook in NotebookLM, it starts with a prompt to add some sources. You can just drag your files in, and it will get to work processing them.

Each recording gets processed and transcribed as you add it, and becomes immediately searchable inside the notebook.

Step 4: Give some context

You can use the “notes” function to add more context to the notebook and recordings, or any source, really. It’s surprisingly useful.

If, for example, there is a lot of specialized jargon or acronyms that you think it will get wrong, you can save yourself later heartache by writing a note with some instructions or context to help it out. In a recent technical session, I added a glossary of some of the acronyms and terms from the project into a note, which I called “Glossary”. You can then, in the note, tell it to convert the note to a source, which means it will consider that source along with the recordings. To drive it home, I created another note, with the following prompt:

The source"glossary of terms.txt" defines some of the acronyms, company names and other industry specific terminology used in the conversation.

I then converted that to a source, and all of the summaries and transcripts after that were completely free of mistaken transcription around company jargon. It was such a timesaver.

Also, in the case where a lot of groups were reporting out their work, for example, I added a prompt into notes as follows:

The source"glossary of terms.txt" defines some of the acronyms, company names and other industry specific terminology used in the conversation.

The audio files are all segments of a single conversation, which was a facilitated process of groups reporting back their work to their colleagues. The work was reported in a series of presentations.

There were 15 teams reporting their work. The teams are as follows:

[a list of the team names, in the order that they reported]

The teams reported out their work in the order specified in the list above. Some of the teams had multiple presentations on topics within their team.

The audio files are in order by filename. "FRO1.mp3" is first, then "FRO2.mp3", then "FRO3.mp3", then "FRO4.mp3", then "FRO5.mp3".

By giving this kind of context around the recordings, I was able to get summaries that conformed to the kind of outputs I wanted, and NotebookLM was able to discern individual teams and topics much more easily. It also meant that I could ask questions about specific teams in my later queries, and get very accurate answers.

You can really play with this part - I was really amazed at how much of a difference these descriptor files made when I added them to sources, and it eliminated a lot of rework in the summaries to get them right.

You can even go so far as to identify some of the speakers in the files, for example, and NotebookLM can then apply that to transcription and attribute what people say.

Step 5: Generate Transcripts

You may not want to generate transcripts, but if you do, you can get really good ones. First, for each of the audio files, if you click on it over in the sources, you can see that there’s already a kind of basic transcription in there that was done as part of the processing. But it’s possible to do much better.

First, with simple prompting, you can get a cleaner transcript than the automatic one. Try something like:

Please give me a full transcript of the conversation, removing filler words and repetitive words, and editing for consistent style.

Giving that little bit of extra direction makes the output still accurate, but much more readable. You might have noticed that I often use “please” in my prompts; it’s my own habit, and also hopefully is something they will remember when they overthrow humanity.

You can also go one step further still, and ask:

Please give me a full transcript of the conversation, removing filler words and repetitive words, and editing for consistent style, while identifying speakers and groups.

Now, an important point on this. I found that for speaker identification and more complex requests like this, the accuracy was much better in shorter recordings. For recordings of around 30 minutes or less, the ability to identify topics and speakers and format the outputs accordingly, there was no problem at all. If the recordings were 1-2 hours, it was less consistent in that kind of formatting, though the transcription accuracy was still great.

This prompt, for example, worked quite well, benefitting substantially from the added notes I had put into sources:

Give me full transcript of this recording, taking into account the glossary of terms, which define some of the acronyms and names in the recording, as well as the list of team names. During the recording, each one of the teams on the list of teams gives a report. The transcript should have filler words removed, and should be separated into paragraphs. For each part where a different team is reporting, create a new paragraph, and label it with the corresponding team name from the list of teams.

If a single, long conversation was split across a number of shorter recordings, I would just add a note giving that context.

Also, be aware that whatever your prompt is, it will be acting on whatever sources you have “checked” in the sources box, so if you’re only wanting to transcribe one, be sure it’s the only one with a check mark in the sources column. If you have a glossary or other context, make sure that’s checked, too.

Step 5: Summarize

NotebookLM has some pre-determined summarization options, but the beauty is that you can define any kind of summary you want. You can define voice, length, the type of summary you want, or, if you have a lot of sources, pull out a thread across all of the sources.

For example:

Create an executive summary of all of the cross-cutting themes and key ideas that emerged in this conversation. The summary should be no more than 400 words. It should be written in an intelligent, but friendly and less formal tone.

Further, if you know, more specifically, what you’re looking for, you can give more directed prompts:

In a single, brief paragraph, summarize what the group learned from the exercise that applied to behaviours in their own company, as well as lessons that could be applied to collaboration and working in teams. It should be written in a tone that is less formal, but appropriate for corporate communications.

You can also prompt for to-do’s, action items, or unresolved areas.

Depending on how well you structure your workflow, you can tighten the summarization cycles enough that in a sequence of conversations, the summary of the previous conversation can be the input for the next conversation, for example.

With all of the answers that are given, you also have the option to copy the response (if you want to paste it into a document) or to save the response into the notes in the notebook.